SBERT.net - Sentence Transformers documentation

Sentence Transformers (a.k.a. SBERT) is the go-to Python module for accessing, using, and training state-of-the-art embedding and reranker models. It can be used to compute …

Pretrained Models — Sentence Transformers documentation

Pretrained Models We provide various pre-trained Sentence Transformers models via our Sentence Transformers Hugging Face organization. Additionally, over 6,000 community …

Quickstart — Sentence Transformers documentation - SBERT.net

Quickstart Sentence Transformer Characteristics of Sentence Transformer (a.k.a. bi-encoder) models: Calculates a fixed-size vector representation (embedding) given texts or images. …

Training Overview — Sentence Transformers documentation

Training Overview Why Finetune? Finetuning Sentence Transformer models often heavily improves the performance of the model on your use case, because each task requires a …

Sentence Transformers: Multilingual Sentence, Paragraph, and …

We provide an increasing number of state-of-the-art pretrained models for more than 100 languages, fine-tuned for various use-cases. Further, this framework allows an easy fine …

Installation — Sentence Transformers documentation - SBERT.net

Installation We recommend Python 3.10+, PyTorch 1.11.0+, and transformers v4.41.0+. There are 5 extra options to install Sentence Transformers: Default: This allows for loading, saving, and …

Cross-Encoders — Sentence Transformers documentation

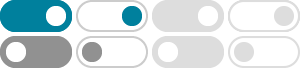

Cross-Encoders SentenceTransformers also supports loading Cross-Encoders for sentence pair scoring and sentence pair classification tasks. Cross-Encoder vs. Bi-Encoder First, it is …

Semantic Textual Similarity - SBERT.net

Semantic Textual Similarity For Semantic Textual Similarity (STS), we want to produce embeddings for all texts involved and calculate the similarities between them. The text pairs …
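The similarity step described in this snippet can be illustrated with a minimal sketch in plain Python. It assumes cosine similarity as the comparison measure, and it uses small made-up vectors in place of real model embeddings; the helper names `cosine_similarity` and `similarity_matrix` are hypothetical and are not part of the library.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity_matrix(embeddings_a, embeddings_b):
    """Score every vector in embeddings_a against every vector in embeddings_b."""
    return [[cosine_similarity(a, b) for b in embeddings_b] for a in embeddings_a]


# Toy 3-dimensional vectors standing in for real embeddings (hypothetical values).
first = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
second = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]

# scores[i][j] is the similarity between first[i] and second[j].
scores = similarity_matrix(first, second)
```

In practice the vectors would come from an embedding model rather than being written by hand; only the comparison step is shown here.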

Natural Language Inference - SBERT.net

The SoftmaxLoss as used in our original SBERT paper does not yield optimal performance. A better loss is MultipleNegativesRankingLoss, where we provide pairs or triplets.
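To make the idea behind a pairs-based ranking loss concrete, here is a rough sketch in plain Python. It assumes the common in-batch-negatives formulation: each anchor should score its own positive higher than every other positive in the batch, measured with cross-entropy over scaled cosine similarities. The function name `in_batch_negatives_loss` and the `scale` value are illustrative assumptions, and this is not the library's implementation.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def in_batch_negatives_loss(anchors, positives, scale=20.0):
    """Mean cross-entropy where positives[i] is the correct match for anchors[i].

    All other positives in the batch act as negatives for anchors[i].
    The scale factor is an assumed value chosen for illustration.
    """
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [scale * cosine(anchor, p) for p in positives]
        # Numerically stable log-sum-exp over the row of logits.
        top = max(logits)
        log_sum_exp = top + math.log(sum(math.exp(v - top) for v in logits))
        # Negative log-probability of the correct (diagonal) entry.
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


# Toy 2-dimensional embeddings (hypothetical values).
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = in_batch_negatives_loss(anchors, [[1.0, 0.0], [0.0, 1.0]])
swapped = in_batch_negatives_loss(anchors, [[0.0, 1.0], [1.0, 0.0]])
```

With these toy vectors, `aligned` is close to zero because every anchor matches its own positive, while `swapped` is large because each anchor is closer to the other pair's positive.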

Usage — Sentence Transformers documentation - SBERT.net

Usage Characteristics of Sentence Transformer (a.k.a. bi-encoder) models: Calculates a fixed-size vector representation (embedding) given texts or images. Embedding calculation is often …